In 1967, late one night in the eucalyptus-scented hills of Palo Alto, John Chowning stumbled across what would become one of the most profound developments in computer music. “It was a discovery of the ear,” says Chowning, who gave a lecture and concert on Oct. 11 sponsored by the Media Lab and the MIT Center for Art, Science & Technology (CAST). While experimenting with extreme vibrato in Stanford’s Artificial Intelligence Lab, he found that once the frequency passed out of the range of human perception, far beyond what any cellist or opera singer could ever dream of producing, the vibrato effect disappeared and a completely new tone materialized.

What Chowning discovered was FM synthesis: a simple yet elegant way of manipulating a basic waveform to produce a potpourri of new and complex sounds — from sci-fi warbles to metallic beats. Frequency modulation (FM) synthesis works, in essence, by using one sound to control the frequency of another sound; the relationship of these two sounds determines whether or not the result will be harmonic. Chowning’s classically trained ear had sounded out a phenomenon whose mathematical rationale was subsequently confirmed by his colleagues in physics, and would populate the aural landscape with the kind of cyborg sounds that gave the 1980s its musical identity.
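The idea of one sound controlling the frequency of another can be sketched in a few lines of Python. This is a minimal illustration using the common textbook form of two-oscillator FM, y(t) = sin(2π·f_c·t + I·sin(2π·f_m·t)), and is not drawn from Chowning's lecture or from any Yamaha implementation. The function names, the parameter names, and the sample rate are assumptions chosen for the example.

```python
import math


def fm_sample(t, carrier_hz, modulator_hz, index):
    """Return one sample of simple FM at time t (seconds).

    The modulator sine shifts the carrier's phase; `index` sets how
    strongly. With index == 0 the result is a plain sine wave.
    """
    modulation = index * math.sin(2 * math.pi * modulator_hz * t)
    return math.sin(2 * math.pi * carrier_hz * t + modulation)


def fm_tone(carrier_hz, modulator_hz, index, seconds=1.0, sample_rate=8000):
    """Render a list of samples in the range [-1, 1]."""
    count = int(seconds * sample_rate)
    return [
        fm_sample(i / sample_rate, carrier_hz, modulator_hz, index)
        for i in range(count)
    ]


def sideband_frequencies(carrier_hz, modulator_hz, order=3):
    """List the frequencies carrier + k * modulator for k in -order..order.

    Negative results are folded back to positive values, since a
    negative frequency sounds at the same pitch as its absolute value.
    """
    found = set()
    for k in range(-order, order + 1):
        found.add(abs(carrier_hz + k * modulator_hz))
    return sorted(found)


# A 1:1 ratio keeps every component on a multiple of 440 Hz (harmonic).
harmonic = sideband_frequencies(440, 440, order=2)

# A non-integer ratio scatters the components (inharmonic).
inharmonic = sideband_frequencies(440, 311, order=2)
```

In this sketch, the ratio between `carrier_hz` and `modulator_hz` decides where the added components fall. A simple integer ratio lands them on multiples of a common fundamental, which the ear tends to hear as a pitched tone, while other ratios scatter them and tend toward the metallic or bell-like sounds the article describes.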

Chowning patented his invention and licensed it to a little-known Japanese company called Yamaha when no American manufacturers were interested. While the existing synthesizers on the market cost about as much as a car, Yamaha had developed an effective yet inexpensive product. In 1983, Yamaha released the DX-7, based on Chowning’s FM synthesis algorithm, and the rest is history. The patent would become one of Stanford’s most lucrative, surpassed only by the technology for gene-splicing and an upstart called Google.

With its user-friendly interface, the DX-7 gave musicians an entrée into the world of programmers, opening up a new palette of possibility. Part of a rising tide of technological developments — such as the introduction of personal computers and the musical lingua franca MIDI — FM synthesis helped deliver digital music from the laboratory to the masses.

The early dream of computer music

The prelude to Chowning’s work was the research of scientists such as Jean-Claude Risset and Max Mathews at AT&T’s Bell Telephone Laboratories in the 1950s and ’60s. These men were the early anatomists of sound, seeking to uncover the inner workings of its structure and perception. At the heart of these investigations was a simple dream: that any kind of sound in the world could be created out of 1s and 0s, the new utopian language of code. Music, for the first time, would be freed from the constraints of actual instruments.

As Mathews wrote in the liner notes of Music from Mathematics, the first recording of computer music, “the musical universe is now circumscribed only by man’s perceptions and creativity.”

“That generation,” says Tod Machover, the Muriel R. Cooper Professor of Music and Media at the MIT Media Lab, “was the first to look at the computer as a medium on its own.” But both the unwieldy, expensive equipment and the clumsiness of the resulting sounds, two problems that Chowning helped surmount, inhibited these early efforts. (By Chowning’s calculations, as he noted in his lecture, the Lab’s bulky IBM 7090 would be worth approximately nine cents today.) By the mid-1960s, however, the research had progressed to the point where scientists could begin to sculpt the mechanical bleeps and bloops into something of musical value.

Frequency modulation played a big part. Manipulating the frequency unlocked the secrets of timbre, that most mysterious of sonic qualities. In reproducing timbre — the distinctive soul of a note — Chowning was like a puppeteer bringing his marionette to life. The effects of FM synthesis conveyed “a very human kind of irregularity,” Machover says.

The future of music

Today, the various and often unexpected applications of FM synthesis are omnipresent, integrated so completely into everyday life that we often take them for granted: a ringing cellphone, for instance. Yet while digital technologies became more and more pervasive, Chowning’s hearing began to worsen and he slowly withdrew from the field. For a composer whose work engaged the most subtle and granular of sonorities, this hearing loss was devastating.

Now, thanks to a new hearing aid, Chowning is back on the scene. The event at MIT on Thursday, titled “Sound Synthesis and Perception: Composing from the Inside Out,” marked the East Coast premiere of his new piece Voices, featuring his wife, the soprano Maureen Chowning, and an interactive computer using the programming language Max/MSP. Chowning sees the piece as a kind of rebuttal to those who once doubted the “anachronistic humanists” who feared the numbing encroachments of the computer. In Voices, he says, the “seemingly inhuman machine is being used to accompany the most human of all instruments, the singing voice.” The piece also sums up a lifetime of Chowning’s musical preoccupations, his innovations in our understanding of sound and its perception, and the far-reaching aesthetic possibilities in the dialogues between man and machine.

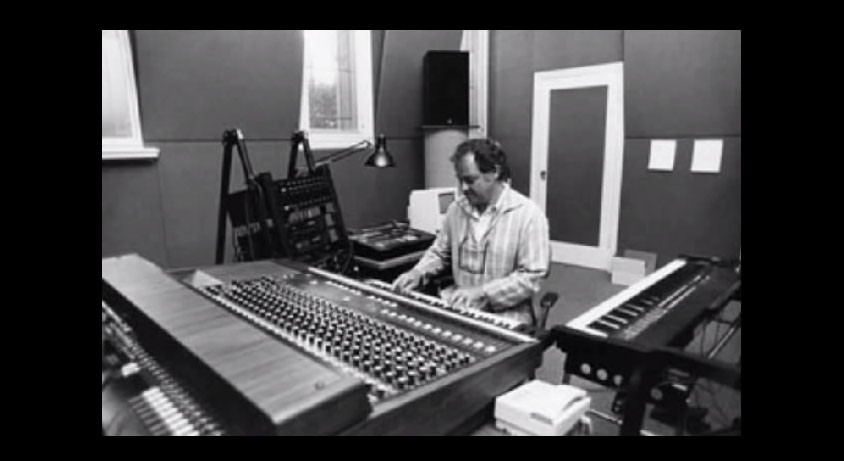

At MIT, Chowning enjoyed meeting the next generation of scientists, programmers and composers, glimpsing the future of music. “The machinery is no longer the limit,” he announced to the crowd. Indeed, MIT has its own rich history of innovation in the field, as embodied by figures such as Professor Emeritus Barry Vercoe, who pioneered the creation of synthetic music at the Experimental Music Studio in the 1970s before going on to head the Media Lab’s Music, Mind, and Machine group. “MIT is in many ways a unique institution,” Chowning says, where “cutting-edge technology interacts with highly developed artistic sensibilities.” In the Media Lab, Chowning saw the dreams of his generation pushed forward. One thing, in his mind, is clear: “music has humanized the computer.”